3. Experimenting with a Genetic Algorithm

Having seen the GA, it is now time to tinker with one to see what happens

3.1. Plot

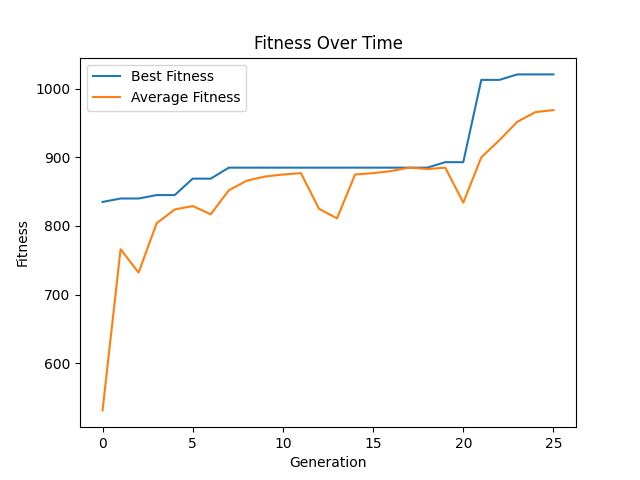

Below is a plot of the learning curve of the evolutionary search

One line shows the fitness of the best candidate solution within the population

The other line shows the average fitness of the population

Plot of a the GA’s “learning curve” for the maximization of the integer value of an unsigned binary number.

The evolutionary algorithm was run with the following settings

18BIT_STRING_LENGTH = 10

19POPULATION_SIZE = 10 # Must be multiple of two

20TOURNAMENT_SIZE = 2

21GENERATIONS = 25

22CROSSOVER_RATE = 0.70

23MUTATION_RATE = 0.10

3.2. Experiment

For all the following questions, take the time to run the GA with several values and plot the results

Note

The easiest way to get the code up and running is to fork the github repository, download it, and run the setup described in the “README”.

Alternatively, the scripts can be downloaded individually to /src, but this may require some additional setup for

the dependencies. It is recommended to make a virtual environment

and download any required dependencies with pip.

Increase the difficulty of the problem by increasing the bitstring length \(n\) (

BIT_STRING_LENGTH)Change the number of generations (

GENERATIONS) to see how it impacts the searchChange the population size (

POPULATION_SIZE) to see how it impacts the searchChange the crossover rate (

CROSSOVER_RATE) to see how it impacts the searchBe sure to include a value of

0.0and1.0in your experimenting

Change the mutation rate (

MUTATION_RATE) to see how it impacts the searchBe sure to include a value of

0.0and1.0in your experimenting

Change the size of the tournament for selection (

TOURNAMENT_SIZE) to see how it impacts the searchSet the size of the tournament for selection (

TOURNAMENT_SIZE) to the population sizeWhat does this mean?

Set the size of the tournament for selection (

TOURNAMENT_SIZE) to1What does this mean?

Add elitism to the algorithm

Elitism is when the top \(x\) candidate solutions are automatically copied to the subsequent generation

Typically, \(x\) is kept low (like 1), but try larger values to see how it impacts the search

What is the best settings of the hyperparameters found?

3.3. A Change of Perspective

In the previous topic, the following candidate solutions were ranked by how close they are to the optimal solution

\(1111111111\) (1023)

\(1011001001\) (713)

\(0001111111\) (127)

\(0000000000\) (0)

This ranks them based on what the candidate solutions mean in terms of the problem’s objective — their integer value

The bitstring that means 713 is better than the one that means 127 since it is closer to the optimal solution

However, instead of thinking about their integer value, consider how close the chromosome is to the optimal solution

The optimal chromosome would be

[1, 1, 1, 1, 1, 1, 1, 1, 1, 1]where \(n=10\)Which chromosome is better

[1, 0, 0, 0, 0, 0, 0, 0, 0, 0](512)[0, 1, 1, 1, 1, 1, 1, 1, 1, 1](511)

Before, the candidate solutions were ranked based on what they mean in the problem space

Phenotype space

By counting the number of ones in the chromosomes, the solutions can be compared based on their encodings

Genotype space

Below is an alternative fitness function that could be used

40def ones_fitness(chromosome: list) -> int:

41 """

42 Count the number of 1s (ones) in the chromosome (bitstring).

43

44 :param chromosome: Chromosome (bitstring) to evaluate

45 :return: The number of 1s (ones) in the chromosome (bitstring).

46 :raises ValueError: If the chromosome is empty

47 """

48 if len(chromosome) == 0:

49 raise ValueError(f"Empty chromosome cannot be evaluated: {chromosome}")

50 number_of_ones = 0

51 for bit in chromosome:

52 number_of_ones += bit

53 return number_of_ones

3.4. Experiment Again

Switch out the use of

value_fitnessforones_fitnessin the fitness calculation of each chromosomePlay with the hyperparameters and keep track of the best settings found

Are they different from before?

3.5. Non-Numerical Hyperparameters

The hyperparameters can be tuned, but so can the individual functional pieces of the GA

There is no rule saying the fitness function must work a certain way

There is no set way to do crossover or mutation

Selection can be done however one wants to

…

All that really matters is that it produces a working GA in the end that finds high-quality solutions to a problem

The ideas of evolutionary computation are truly only rough frameworks

The individual concrete pieces are flexible and can be whatever works

Try to create your own crossover function

Try to create your own mutation function

Try to create your own selection strategy

Did your changes improve the GA or not?

They do not need to

Just have fun and try to be creative

3.6. For Next Class

If not already done, fork the course github repository